Ondrej Texler

Principal Research Scientist at Valka.ai

PhD graduate, CTU in Prague

"I'd rather be anything but ordinary, please" —Avril Lavigne

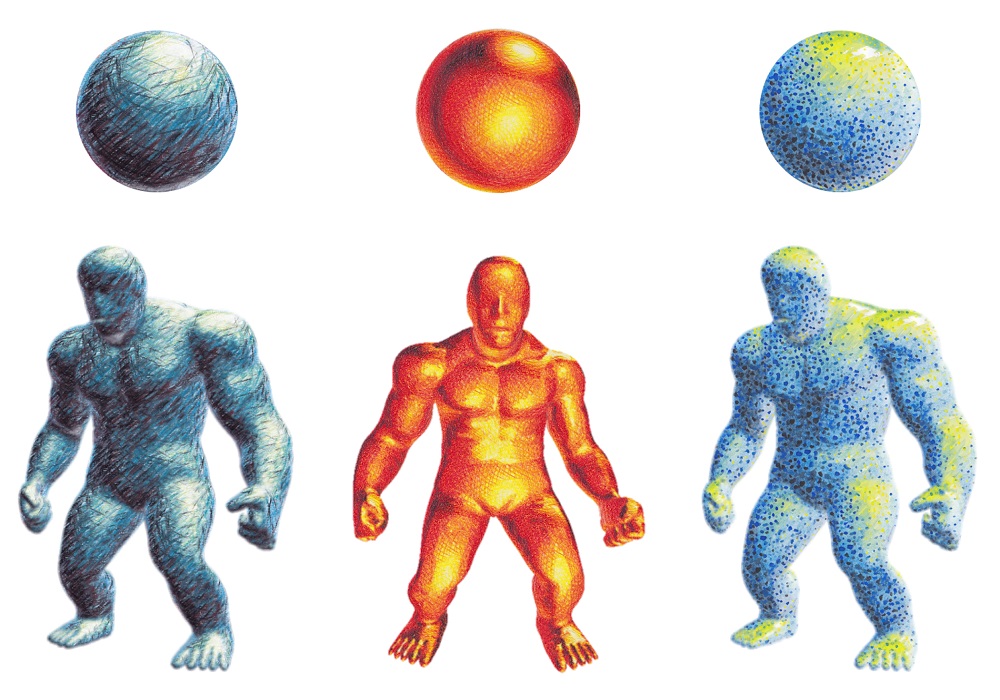

My entire research career has revolved around generating realistic-looking content under given conditions, ranging from painterly images and animated stylized videos to expressive photorealistic avatars with believable and controllable motion. I hold a PhD in Computer Graphics, have published over 10 papers at top venues such as CVPR and SIGGRAPH, have been cited over 300 times, and have GitHub repositories with over 700 stars and 100 forks. I have co-invented 9 patents, created proofs of concept that helped raise a multi-million seed round, shipped and released computer vision models and novel rendering pipelines that unlocked millions of dollars in ARR, founded and led research teams, and peer-reviewed tens of papers. I have won prizes such as the Best in Show Award at SIGGRAPH Real-Time Live! and the Joseph Fourier Prize, and have given invited talks and interviews at SIGGRAPH Now, ECCV, and BBC News.

Now, as a Principal Research Scientist at Valka.ai, I manage and tech-lead the core video research team of 10+ research scientists and several university collaborations. I focus on photorealistic rendering of human faces, hands, and bodies via neural rendering techniques including DiT, Gaussian Splatting, and GANs, and I conduct research into human hand motion modeling and facial expression modeling.

D. Kunz, O. Texler, D. Mould, and D. Sýkora

IEEE Computer Graphics and Applications, CG&A Workshop at SIGGRAPH Asia 2025

Y. Wang, I. Molodetskikh, O. Texler, and D. Dinev

arXiv pre-print, 2025

S. Ravichandran, O. Texler, D. Dinev, and HJ. Kang

IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2023

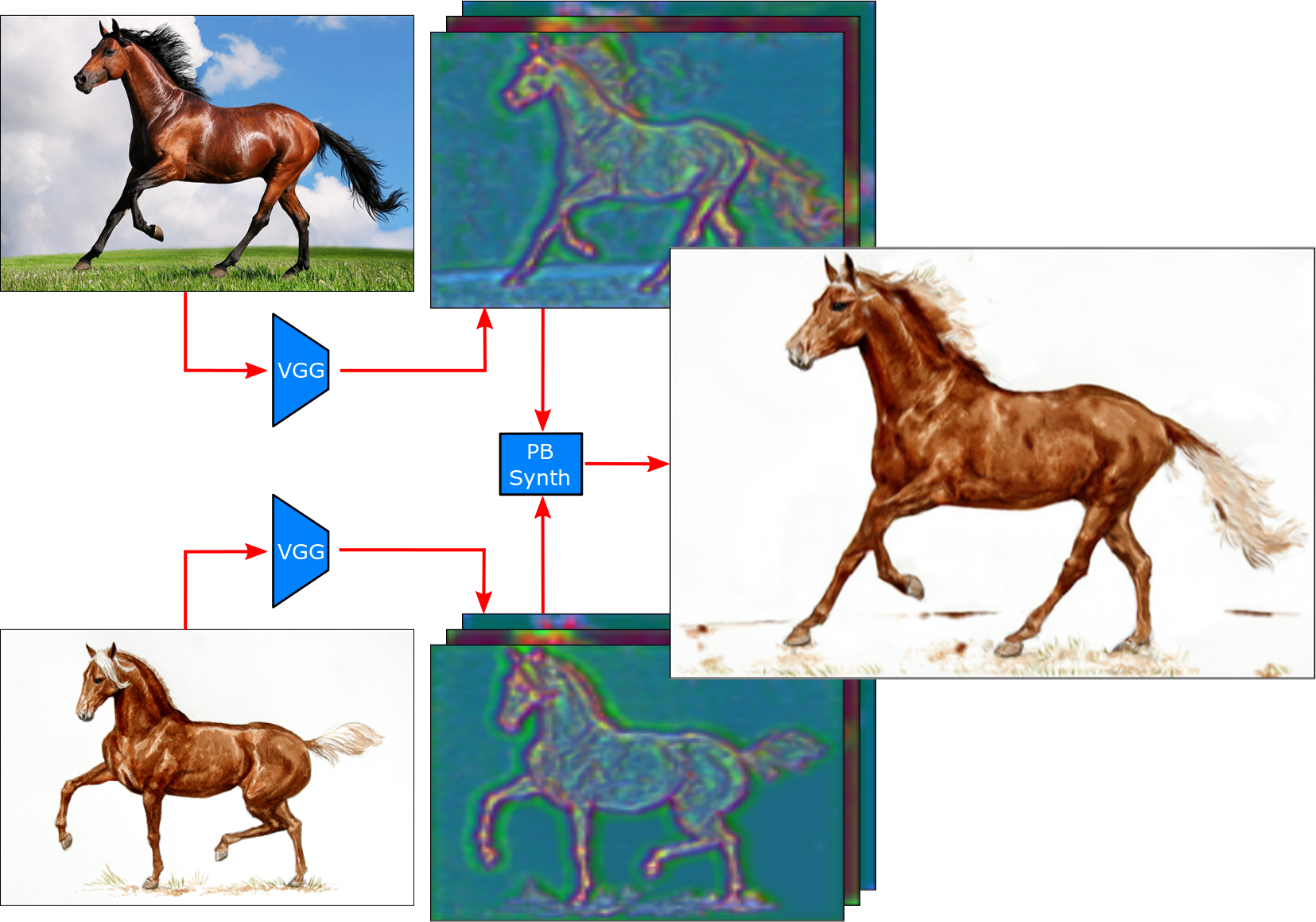

A. Texler, O. Texler, M. Kučera, M. Chai, and D. Sýkora

In Proceedings of the ACM on Computer Graphics and Interactive Techniques, 4(1), 2021 (I3D 2021)

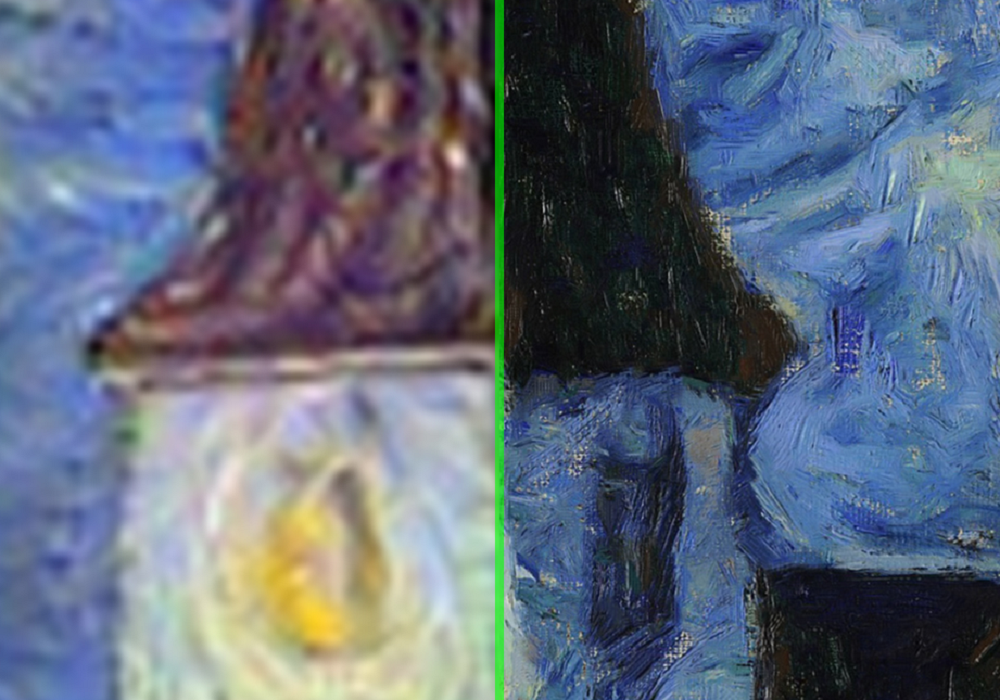

F. Hauptfleisch, O. Texler, A. Texler, J. Křivánek, and D. Sýkora

In Computer Graphics Forum 39(7):575-586 (Pacific Graphics 2020)

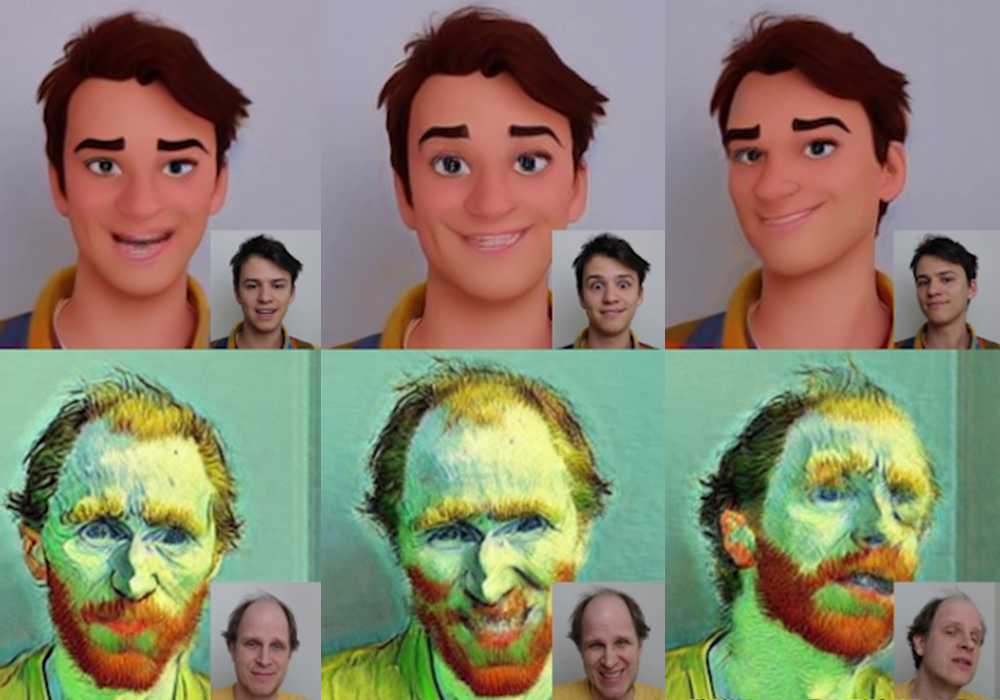

O. Texler, D. Futschik, M. Kučera, O. Jamriška, Š. Sochorová, M. Chai, S. Tulyakov, and D. Sýkora

In ACM Transactions on Graphics 39(4):73 (SIGGRAPH 2020), Best in Show Award at SIGGRAPH Real-Time Live!

O. Texler, D. Futschik, J. Fišer, M. Lukáč, J. Lu, E. Shechtman, and D. Sýkora

In Computers & Graphics 87:62-71 (January 2020)

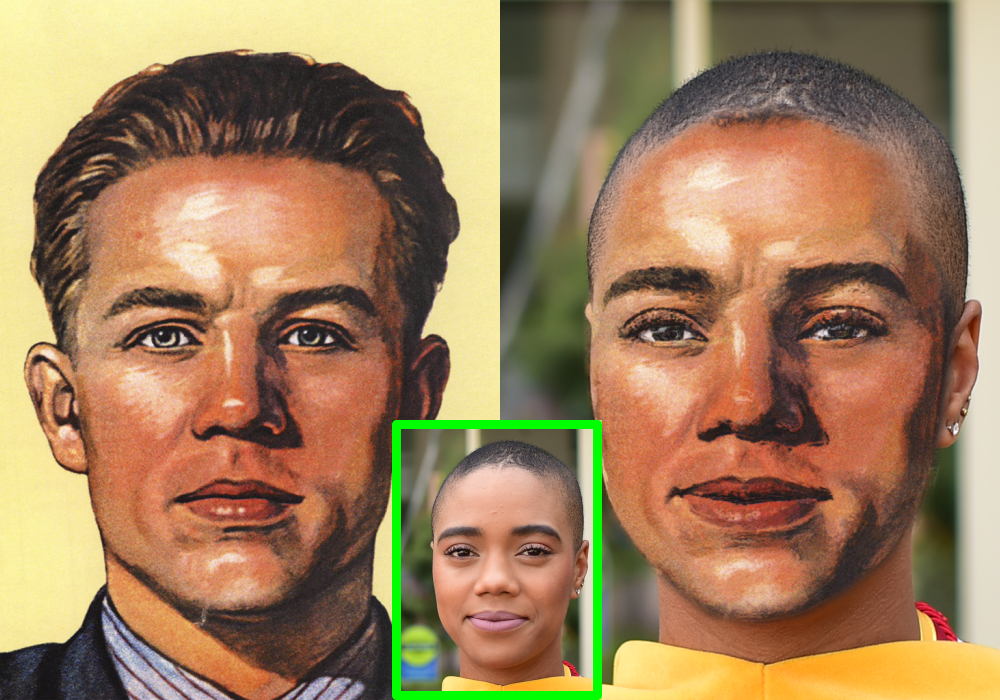

O. Jamriška, Š. Sochorová, O. Texler, M. Lukáč, J. Fišer, J. Lu, E. Shechtman, and D. Sýkora

In ACM Transactions on Graphics 38(4):107 (SIGGRAPH 2019, Los Angeles, California, July 2019)

O. Texler, J. Fišer, M. Lukáč, J. Lu, E. Shechtman, and D. Sýkora

In Proceedings of the 8th ACM/EG Expressive Symposium, pp. 43-50 (Expressive 2019, Genoa, Italy, May 2019)

D. Sýkora, O. Jamriška, O. Texler, J. Fišer, M. Lukáč, J. Lu, and E. Shechtman

In Computer Graphics Forum 38(2):83-91 (Eurographics 2019, Genoa, Italy, May 2019)

O. Texler and D. Sýkora

In Proceedings of the 22nd Central European Seminar on Computer Graphics (CESCG 2018, Smolenice, Slovakia, 2018)

Computer Graphics,

FEE, CTU in Prague.

Computer Science,

FIT, CTU in Prague.

Computer Science,

FIT, CTU in Prague.

Mathematics, Physics, and Descriptive Geometry, Gymnasium of Christian Doppler.