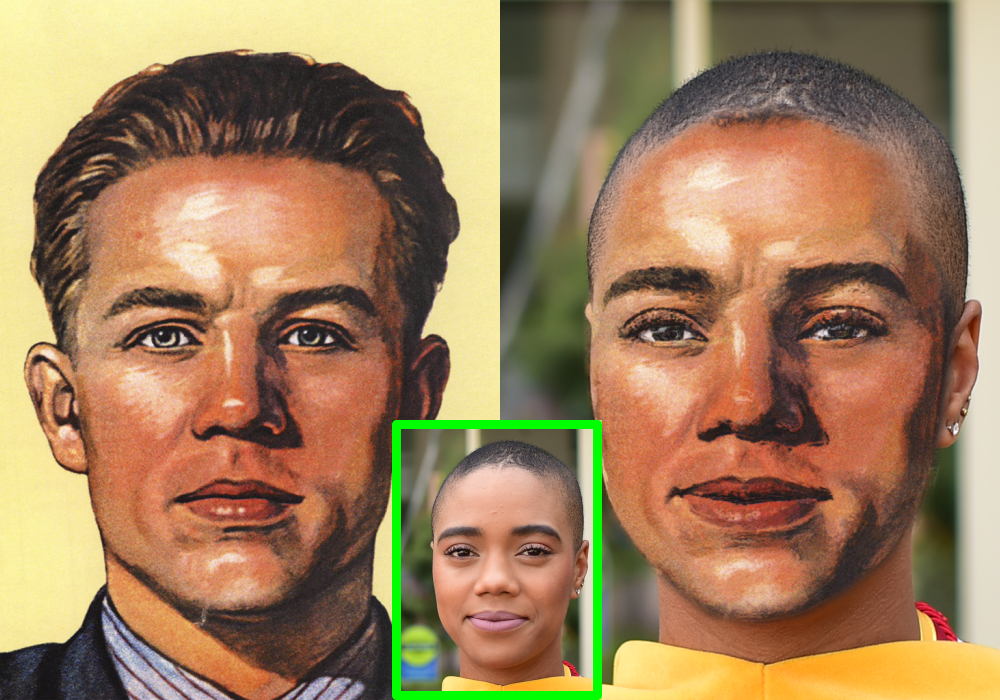

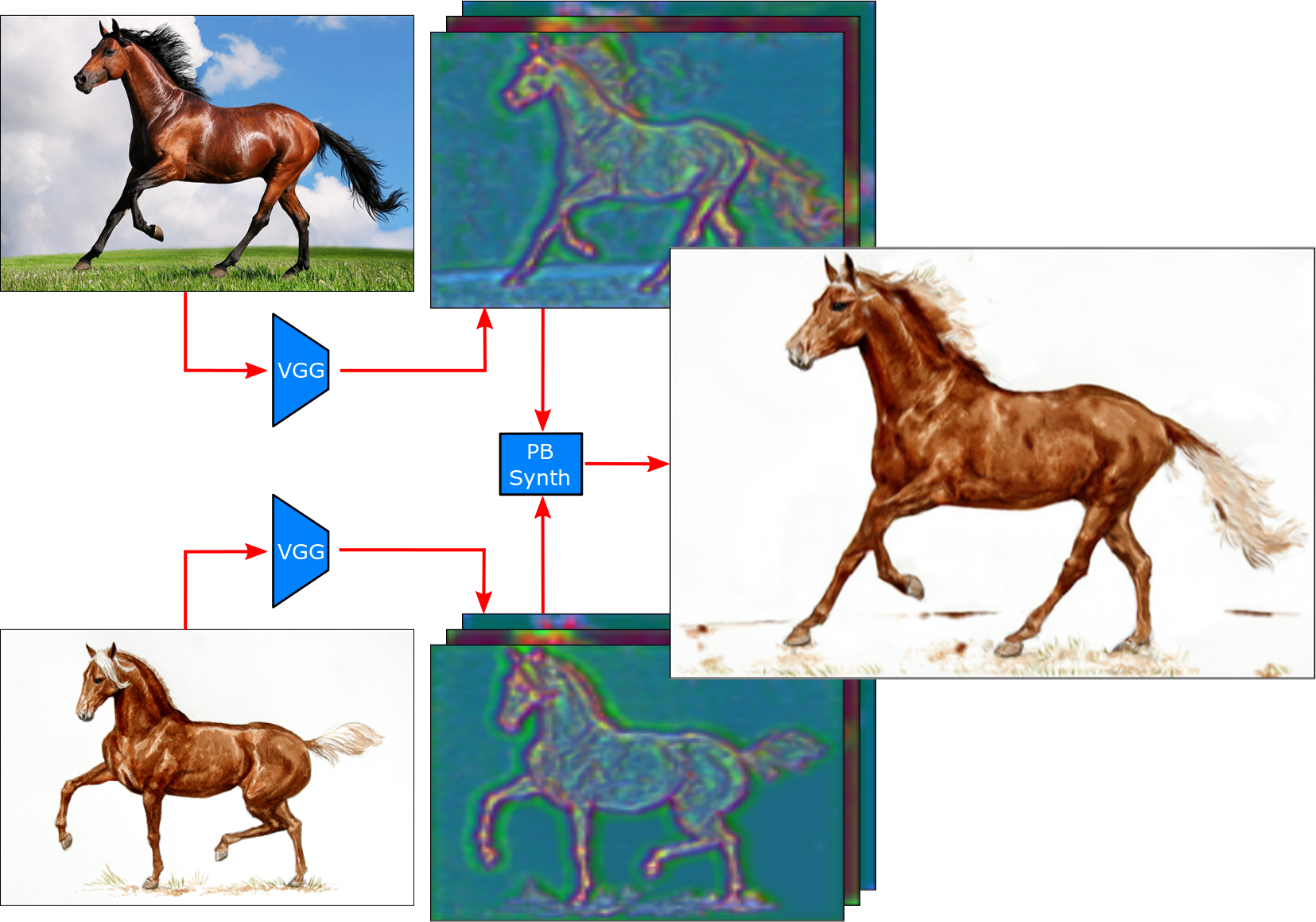

I am a GenAI Researcher at HeyGen, where I focus on research and applications of generative video models for content creation. I obtained my PhD in Computer Graphics at CTU in Prague under the supervision of Prof. Daniel Sýkora, and I hold BSc and MSc degrees in Computer Science from the same university. My entire research career has revolved around generating realistic-looking content under given conditions. During my PhD, I focused on style transfer: generating realistic-looking paintings and animated movies. I was fortunate to do two internships at Adobe Research, one at Snap Research, and one at Samsung Research America, which resulted in 8 publications and the Best in Show Award at Real-Time Live at SIGGRAPH 2020. After completing my PhD, I joined NEON, Samsung Research America, as a senior research scientist, where I spent more than 2 years working on computer vision techniques for generating virtual humans, with a particular emphasis on photorealism, resulting in 9 patents and a CVPR publication. I then joined Drip Artificial as a founding research scientist to lead research efforts in generative AI, diffusion models, synthesis of stylized videos from text descriptions, and propagation of edits through video.

Diffusion and generative models for synthesizing stylized videos from a text description, including style transfer, video synthesis, propagation of edits through a video sequence, and implicit neural video representations.

S. Ravichandran, O. Texler, D. Dinev, and HJ. Kang

IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2023

A. Texler, O. Texler, M. Kučera, M. Chai, and D. Sýkora

In Proceedings of the ACM on Computer Graphics and Interactive Techniques, 4(1), 2021 (I3D 2021)

F. Hauptfleisch, O. Texler, A. Texler, J. Křivánek, and D. Sýkora

In Computer Graphics Forum 39(7):575-586 (Pacific Graphics 2020)

O. Texler, D. Futschik, M. Kučera, O. Jamriška, Š. Sochorová, M. Chai, S. Tulyakov, and D. Sýkora

In ACM Transactions on Graphics 39(4):73 (SIGGRAPH 2020), Best in Show Award at SIGGRAPH Real-Time Live!

O. Texler, D. Futschik, J. Fišer, M. Lukáč, J. Lu, E. Shechtman, and D. Sýkora

In Computers & Graphics 87:62-71 (January 2020)

O. Jamriška, Š. Sochorová, O. Texler, M. Lukáč, J. Fišer, J. Lu, E. Shechtman, and D. Sýkora

In ACM Transactions on Graphics 38(4):107 (SIGGRAPH 2019, Los Angeles, California, July 2019)

O. Texler, J. Fišer, M. Lukáč, J. Lu, E. Shechtman, and D. Sýkora

In Proceedings of the 8th ACM/EG Expressive Symposium, pp. 43-50 (Expressive 2019, Genoa, Italy, May 2019)

D. Sýkora, O. Jamriška, O. Texler, J. Fišer, M. Lukáč, J. Lu, and E. Shechtman

In Computer Graphics Forum 38(2):83-91 (Eurographics 2019, Genoa, Italy, May 2019)

O. Texler and D. Sýkora

In Proceedings of the 22nd Central European Seminar on Computer Graphics (CESCG 2018, Smolenice, Slovakia, 2018)

Computer Graphics, FEE, CTU in Prague.

Dissertation Thesis: Example-based Style Transfer.

Computer Science, FIT, CTU in Prague.

Master Thesis: Digital Image Processing and Image Stylization.

Computer Science, FIT, CTU in Prague.

Bachelor Thesis: Architecture design and implementation of a large software system.

Mathematics, Physics, and Descriptive Geometry specialization, Gymnasium of Christian Doppler.